Project-K: Local AI Voice Assistant

Summary

Commercial voice assistants such as Amazon Alexa and Google Home collect data with every interaction and often lack the contextual awareness needed for natural conversation. Project-K is a locally running AI assistant built on a Raspberry Pi, designed to hold more natural, human-like conversations while keeping all data on the device, unlike commercial solutions. It investigates whether fully local AI is feasible on edge hardware without relying on the cloud.

Dataset and Features

This project emphasizes system integration over custom model training. It relies on pretrained models that can run on low-power hardware:

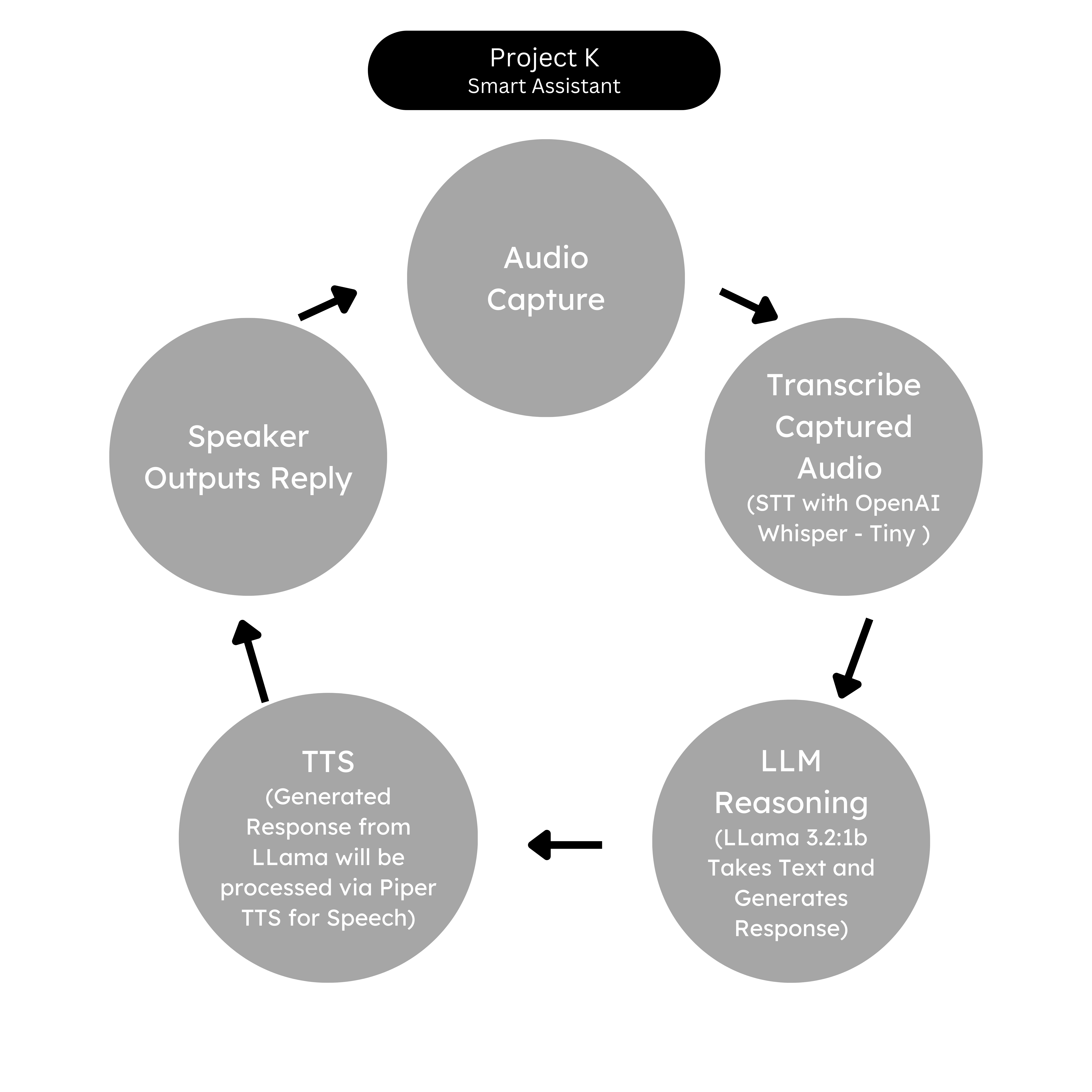

- Speech-to-Text: OpenAI's Whisper (Tiny), pretrained on diverse multilingual datasets, handles the audio transcription.

- LLM Reasoning: Meta's Llama 3.2 (1B parameters) acts as the brain, selected for its balance of reliability and energy efficiency.

- Text-to-Speech: Piper TTS provides the spoken output, chosen specifically for its ability to run fully offline on devices without a GPU.

The Pipeline

The system operates on a continuous loop of audio capture, transcription, reasoning, and synthesis.

Figure: The end-to-end loop, processing audio input through Whisper, Llama, and Piper.
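
The capture, transcription, reasoning, and synthesis loop can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `VoicePipeline` class and the four stage callables are hypothetical names, and in a real build each callable would wrap Whisper, Llama, and Piper respectively. Keeping a running message history is one plausible way to give the LLM conversational context; the source does not say how context is stored.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical stage signatures; real implementations would wrap
# Whisper (transcribe), Llama (reason), and Piper (synthesize).
Capture = Callable[[], Optional[bytes]]   # raw audio, or None to stop
Transcribe = Callable[[bytes], str]       # speech-to-text
Reason = Callable[[List[dict]], str]      # reply given the message history
Synthesize = Callable[[str], bytes]       # text-to-speech


@dataclass
class VoicePipeline:
    capture: Capture
    transcribe: Transcribe
    reason: Reason
    synthesize: Synthesize
    history: List[dict] = field(default_factory=list)

    def step(self) -> Optional[bytes]:
        """Run one capture -> transcribe -> reason -> synthesize turn."""
        audio = self.capture()
        if audio is None:
            return None  # capture signalled shutdown
        text = self.transcribe(audio).strip()
        if not text:
            return b""  # nothing intelligible; skip the costly LLM call
        self.history.append({"role": "user", "content": text})
        reply = self.reason(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return self.synthesize(reply)

    def run(self, play: Callable[[bytes], None]) -> int:
        """Loop until capture() returns None; return the number of spoken turns."""
        turns = 0
        while True:
            speech = self.step()
            if speech is None:
                return turns
            if speech:
                play(speech)
                turns += 1
```

Because the stages are plain callables, the loop can be exercised with stubs on a development machine before the real models are wired in on the Raspberry Pi.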

Discussion

The results from Project-K demonstrate that a fully local voice assistant is feasible on edge AI hardware, though it comes with distinct trade-offs. The system excels in privacy and contextual retention, outperforming Google Home in multi-turn conversations, but it suffers from high response latency.

The average response latency was approximately 15.4 seconds, primarily because CPU utilization spiked to as high as 99% during LLM inference on the Raspberry Pi. Although it is slower than cloud-based alternatives, Project-K shows that privacy-conscious users can use a smart assistant without ceding control of their data to big tech companies.
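
The source does not describe how latency was measured. As one possible approach, the sketch below times each pipeline stage separately, which would make it easy to confirm that LLM inference dominates the total. `StageTimer` and the stage names are hypothetical, and only the Python standard library is used.

```python
import time
from contextlib import contextmanager
from statistics import mean
from typing import Dict, Iterator, List


class StageTimer:
    """Collects wall-clock durations per named pipeline stage."""

    def __init__(self) -> None:
        self.samples: Dict[str, List[float]] = {}

    @contextmanager
    def measure(self, stage: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the stage raised an exception.
            elapsed = time.perf_counter() - start
            self.samples.setdefault(stage, []).append(elapsed)

    def averages(self) -> Dict[str, float]:
        """Mean duration in seconds for each stage."""
        return {stage: mean(values) for stage, values in self.samples.items()}

    def average_total(self) -> float:
        """Mean end-to-end latency, assuming each stage runs once per turn."""
        return sum(self.averages().values())
```

Wrapping each stage call, for example `with timer.measure("llm"): ...`, and then reading `timer.averages()` after a batch of test prompts would give a per-stage breakdown alongside the overall average.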